A blog about life, Engineering, Business, Research, and everything else (especially everything else)

Thursday, May 31, 2018

[Links of the Day] 31/05/2018 : Testing Distributed Systems, Quantum Supremacy, Togaf Tool

- Testing distributed systems : Curated list of resources on testing distributed systems. There is no silver bullet, just sweat, blood and broken systems.

- The Question of Quantum Supremacy : Google folks are trying to determine the smallest computational task that is prohibitively hard for today’s classical computers but trivial for a quantum computer. This is the equivalent of hello world for a quantum computer and is critical to validate quantum computer capability and correctness.

- Archi : open source modelling tool to create ArchiMate models and sketches. If you ever look at TOGAF or use enterprise architecture principles, this tool is for you.

Labels: Distributed systems, enterprise architecture, links of the day, quantum, testing, togaf

Wednesday, May 30, 2018

The curse of low-tech Scrum

I recently read the following article that describes how scrum disempowers devs. It criticises the "sell books and consulting" aspect that seems to have become the primary driver behind the Agile mantra. Sadly, I strongly agree with the authors' view.

Scrum brings some excellent value to the technical development process, such as:

- Sprints offer a better way to organise work than waterfall.

- It forces teams to ship functional products as frequently as possible, to get feedback early and often from the end user.

- It requires stopping what you're doing on a regular basis to evaluate progress and problems.

However, Scrum quickly spread within the tech world as a way for companies to be "agile" without too much structural change. First, Scrum does not require technical practices and can be installed in place at existing waterfall companies doing what is effectively mini-waterfall. Second, such deployment generates little disruption to the corporate hierarchy (and this is the crux of the issue). As a result, Scrum allows managers and executives to feel like something is being done without disturbing the power hierarchy.

The method talks about being flexible and adapting when there are real business needs to adjust to. However, the higher levels of the corporation rarely adjust, which relegates Scrum to letting companies move marginally in the direction of agility and declare "mission accomplished". Agile ends up providing a low-tech placebo solution to an organisational aspiration.

Last but not least, adopting a methodology for the sake of it is often doomed to fail. If you have a customer that needs a new thing built by a specific date, then Scrum is less than ideal, as it requires a flexible date and customer stakeholders who are profoundly involved in the process. The waterfall approach would be a better choice, as it forces you to define the project up front and allows for calling out changes to the plan, and thus changes to the scope.

It is often disappointing to see claims by consulting firms that an organisation needs to adopt agile. It's a piecemeal solution that will only temporarily mask deeper organisational problems without the required structural change. Your organisation as a whole does not suddenly become more agile just because your dev teams started to use agile or devops.

Don't misunderstand this blog post as a complete rejection of the principles of Scrum and agile. It's not. The core ideas are awesome and should be adopted where they suit. Other methodologies such as waterfall, devops, etc. also have their place in an organisation, depending on the lifecycle stage of the products. However, these need to be adopted alongside organisational change beyond the dev teams to improve the overall operations and efficiency of the company. Without these, it's just a low-tech placebo.

Tuesday, May 29, 2018

[Links of the Day] 29/05/2018 : Tracers performance, Testing Terraform , Virtual-kubelet

- Benchmarking kernel and userspace tracers : a good recap of the tracing toolkits out there and the performance tradeoffs that come with them.

- terratest : this is the thing I was looking for, a way to test and validate my Terraform scripts. I think this will really help the adoption of Terraform, as it will significantly increase confidence in Terraform code before deployment.

- Virtual-Kubelet : that's a really cool concept, and it introduces a great dose of flexibility in your Kubernetes cluster deployment. There are already some really exciting solutions leveraging it, such as the AWS Fargate integration. With this, you could easily implement bursting and batching solutions, or a real hybrid k8s solution with virtual kubelets hosted in Azure, AWS and on your private cloud.

Labels: infrastructure, Kubernetes, links of the day, terraform, testing, tracing

Thursday, May 24, 2018

[Links of the day] 24/05/2018 : TLA+ video course, Pdf Generator, Quantum Algorithms Overview

- The TLA+ Video Course : if you ever had to design a distributed system and spent sleepless nights thinking about edge cases, TLA+ is a godsend. You just spec your system & go. It also gives a huge decrease in cognitive load when you're implementing your system against a TLA+ spec. The hard stuff is already done. You can just glance at the spec to see what preconditions must be checked before an action is performed. No pausing halfway through writing a function as you suddenly think of an obscure sequence of events that breaks your code.

- ReLaXed : generate PDF from HTML. It supports Markdown, LaTeX-style mathematical equations, CSV conversion to HTML tables, plot generation, and diagram generation. Many more features can be added simply by importing an existing JavaScript or CSS framework.

- Quantum algorithms: an overview : a survey of some known quantum algorithms, with an emphasis on a broad overview of their applications.

Labels: algorithm, Distributed systems, links of the day, pdf, quantum, tla, verification

Monday, May 21, 2018

[Links of the Day] 21/05/2018 : Automation and Make, FoundationDB, Usenix NSDI18

- Automation and Make : this is a really good description of best practices for Makefiles and automation.

- FoundationDB : Apple open sourced its distributed DB system; another contender enters the fray. With Spanner on Google Cloud, CockroachDB and now FoundationDB, highly resilient distributed transactional systems are starting to reach widespread usage. [Github]

- Usenix NSDI 2018 Notes: a very good overview of the NSDI conference, and naturally The Morning Paper is currently doing a more in-depth analysis of the main papers. [day 2&3]

Labels: apple, conference, database, links of the day, usenix

Friday, May 18, 2018

Hedging GDPR with Edge Computing

Cloud has drastically changed the way companies deal with data as well as compute resources. They are no longer constrained by tedious and long procurement processes, and they enjoy unparalleled flexibility. The next wave of change is currently taking shape: a combination of serverless solutions, offering ever more flexibility coupled with more significant financial control, and of computing at the edge, where the amount of data and the complexity of applications are driving requirements for local options.

IoT and AR/VR are the two obvious applications driving enterprises to the edge, because they combine complicated and expensive solutions with humongous performance requirements such as extra low latency with no jitter.

However, other reasons for edge computing are starting to emerge, and they will probably attract more traditional enterprises because of the advantages conferred by the ultra localisation of data and compute.

GDPR has the potential to accelerate edge computing adoption. Edge computing can offer hyper localisation of data storage as well as processing. These features tick many boxes of the regulatory requirements. With the boom in personal data generated by the ever-increasing number of consumer devices, like smart watches, smart cars and homes, there is an ever-looming potential for a company to expose itself to GDPR infractions. Not to mention that data ownership and responsibility can also be tricky questions to answer. For example, who is responsible for the data: the consumer, the watch provider or the vendor?

One of the solutions delivered by edge computing would be to store and process data onsite, within the local premises or a delimited geographical perimeter. It would not only offer greater access and guaranteed control, but also enable hyper localisation and regulatory compliance.

Hence, there is a significant potential market for future edge computing providers offering robust regulatory and compliance solutions. Look at gaming servers and underage data protection, HR or healthcare information. There is a vast trove of customers that will now see a way of leveraging cloud-like models while maintaining tight geographical and regulatory constraints. One possibility would be a form of reverse takeover, or merger: edge computing providers would be invited to leverage existing on-premise infrastructure and turn it into a cloud-like serverless solution with strong compliance out of the box. It would allow companies to benefit at low cost from cloud-like flexibility while offering robust regulatory compliance by explicitly exposing and constraining storage and compute operations to specific locations.
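One way to picture what constraining storage and compute operations to specific locations could look like is a policy check that runs before any operation is accepted. The Python sketch below is purely illustrative: the policy format, the location names and the `is_allowed` function are all hypothetical and do not correspond to any provider's API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LocationPolicy:
    """Hypothetical policy: where a data set may be stored and processed."""
    dataset: str
    allowed_locations: frozenset


def is_allowed(policy: LocationPolicy, operation: str, location: str) -> bool:
    """Allow 'store' or 'compute' only inside the permitted locations."""
    if operation not in {"store", "compute"}:
        raise ValueError(f"unknown operation: {operation}")
    return location in policy.allowed_locations


# Made-up example: HR records pinned to two local sites.
hr_records = LocationPolicy("hr-records", frozenset({"dublin-onprem", "cork-edge"}))

print(is_allowed(hr_records, "store", "dublin-onprem"))  # True
print(is_allowed(hr_records, "compute", "us-east"))      # False
```

The point of the sketch is only that location becomes an explicit, checkable attribute of every operation, rather than an implicit property of wherever the cloud provider happens to place the workload.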

Last but not least, edge computing providers will be able to facilitate on-demand access to local data or processing capability for third parties, while having the capability to enforce robust compliance. This opens an entirely new market for compliant data brokerage. For example, customers can allow access to data, or extraction of metadata, from the vendor back to the watch provider or their own medical insurance company, with all these interactions mediated (and charged) by the edge computing provider.

By becoming the custodian of data at the edge, edge computing providers can build a two-sided market. On one side, they serve data generators (customers, individuals, organisations), aka data issuers and issuer processors. On the other side, they serve merchants, advertising companies, insurers, etc., aka acquirers and acquirer processors. The edge computing provider would facilitate the transactions between issuer and acquirer while enabling hyper-local and compliant solutions. A little bit like Visa, but for data and compute.

Labels: cloud, compliance, gdpr, regulation, serverless

Thursday, May 17, 2018

[Links of the Day] 17/05/2018 : Edge Computing and the Red Wedding problem, Vector Embedding utility , Scalability efficiency

- Towards a Solution to the Red Wedding Problem : interesting look at how to handle a massive read spike while being able to update the content (a write spike) at the same time. The authors propose to leverage edge computing to spread and limit the impact of a write-heavy spike in such a network.

- Magnitude : this is a really cool project for those out there dabbling with NLP and vector embeddings. This package delivers a fast, efficient universal vector embedding utility.

- Scalability! But at what COST? : the authors of this paper introduce a way of measuring the scalability of a solution: the hardware configuration required before the platform outperforms a competent single-threaded implementation. As is often the case, most systems and companies do not need a monstrous cluster to satisfy their needs. But it's always more glamorous to say: "we used a cluster" rather than: "I upgraded the RAM so the model can fit in memory".
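As a rough illustration of the COST idea from the last link, here is a minimal Python sketch. The runtimes are made-up numbers and the function name `cost` is my own, not taken from the paper: it simply returns the smallest core count at which the scalable system beats the single-threaded baseline.

```python
from typing import Dict, Optional


def cost(single_thread_seconds: float,
         scalable_runtimes: Dict[int, float]) -> Optional[int]:
    """Return the smallest core count at which the scalable system is
    faster than the single-threaded baseline, or None if it never is."""
    for cores in sorted(scalable_runtimes):
        if scalable_runtimes[cores] < single_thread_seconds:
            return cores
    return None


# Hypothetical measurements: cores -> runtime in seconds.
measurements = {1: 900.0, 8: 400.0, 16: 310.0, 64: 150.0, 128: 95.0}
baseline = 300.0  # hypothetical single-threaded runtime in seconds

print(cost(baseline, measurements))  # 64
```

With these invented numbers the cluster needs 64 cores before it beats one well-written thread, which is exactly the kind of result that makes the RAM upgrade look attractive.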

Labels: edge computing, efficiency, links of the day, NLP, papers, scalability, vector embedding

Monday, May 14, 2018

[Links of the Day] 14/05/2018 : Concurrency and Paxos resources, PostgreSQL + docker streaming replication

- PostDock : This is an interesting project. It aims at delivering a Postgres streaming replication cluster for any Docker environment. Sprinkle this with Kubernetes config and you would end up with an RDS equivalent. Even so, I still think that in the long run CockroachDB / Spanner style solutions are probably better for cloud deployment.

- awesome-consensus : Awesome list for Paxos and friends.

- Seven Concurrency Models in Seven Weeks : more concurrency stuff. Excellent (free) book looking at all the important stuff: threads & locks, functional programming, separating identity & state, actors, sequential processes, data parallelism, and the lambda architecture.

Labels: concurrency, consensus, docker, links of the day, paxos, postgres, replication

Thursday, May 10, 2018

[Links of the Day] 10/05/2018 : GDPR guide for devs , Gloo function gateway, HA SQL

- GDPR - a practical guide for developers : If you are wondering why you are getting so many email notifications regarding updates to terms of service, you need to read this. It's a rather simple explanation of what GDPR means and how it impacts developers. The follow-up discussion on Hacker News is also a must read, as it expands on and nuances the article.

- Gloo: Gloo is a function (as in serverless) proxy router. It is a function gateway service that allows you to compose legacy and serverless services through a single platform. It's built by solo.io on top of the Envoy proxy. One interesting bit is that it allows function-level routing features that are hard to achieve via a standard API gateway, such as fan out, canary, etc. [github]

- phxsql : Tencent's high availability MySQL cluster. It aims at guaranteeing data consistency between a master and slaves using the Paxos algorithm. This looks promising; however, I would really like to see how it behaves under the Jepsen verification framework.

Labels: function as a service, gdpr, high availability, links of the day, serverless, sql

Wednesday, May 09, 2018

A look at Google gVisor OCI runtime

Google released a new OCI container runtime: gVisor. This runtime aims at solving part of the security concerns associated with the current container technology stack. One of the big arguments of the virtualisation crowd has always been that the lack of explicit partitioning and protection of resources can facilitate “leakage” from a container to the host or to adjacent containers.

This stems from the historical evolution of containers in Linux. Linux has no native concept of containers, unlike BSD with Jails or Solaris with Zones. Containers in Linux are the result of the gradual emergence of a stack of various security and isolation technologies that were introduced in the Linux kernel. As a result, Linux ended up with a very broad technology stack whose parts can be separately turned on / off or tuned. However, there is no such thing as a pure sandbox solution. It's the classic jack of all trades curse: a master of none.

The Docker runtime (containerd) packages all the Linux kernel isolation and security stack (namespaces, cgroups, capabilities, seccomp, AppArmor, SELinux) into a neat solution that is easy to deploy and use.

It allows the user to restrict what the application can do, such as which files it can access and with which permissions, and to limit resource consumption such as network, disk I/O or CPU. It allows applications to happily share resources without stepping on each other's toes. It also limits the risk of their data being accessed by others sitting on the same machine.

A correct configuration (the default one is quite reasonable) will block anything that is not authorised and, in principle, protect from any leak from a malicious or badly coded piece of code running in the container.

However, you have to understand that Docker already has some real limitations. It has only limited support for user namespaces. A user namespace allows applications to have UID 0 (aka root) within the container while the container itself, and the user running it, have a lower privilege level on the host. As a result, each container can run under a different user ID without stepping on each other's toes.

All of these features rely on the reliability and security (as in no bugs) of the Linux kernel. Most of Docker's advanced features rely on kernel features, and getting new features is a multi-year effort. For example, it took a while for good resource isolation mechanisms to percolate from the first RFC to the stable branch. As a result, Docker and the current container ecosystem are directly dependent on the Linux kernel's update inertia as well as its code quality. While the kernel is excellent, no system is entirely free of bugs, not to mention the eternal race to patch them once they are discovered and fixed.

Hence the idea: rather than sharing the kernel between all the containers, have one kernel per container, explicitly limiting potential leakage and interference and reducing the attack surface. gVisor adopts this approach, which is not new, as Kata Containers already implemented something similar. Kata Containers is the result of the fusion of Clear Containers (Intel) and runV (Hyper). Kata Containers uses KVM to run a minimalistic kernel dedicated to the container runtime. But you still need to manage the host machine to ensure fair resource sharing and to secure those resources. This additional layer of indirection limits the attack surface: even if a kernel bug is discovered, you will be hard pressed to exploit it to escape to an adjacent container or to the underlying host, as the kernels are not shared.

gVisor can run on top of KVM; however, it was initially, and still is, primarily designed around ptrace. User Mode Linux already used the same technique, which is to start a userspace process that acts as the kernel for the subsystem running on top, similar to the hypervisor model used by virtual machines. All the system calls are executed with the permissions of the userspace process, on behalf of the subsystem, via an interception mechanism.

Now, how do you intercept these system calls, which should be executed by the kernel? UML and gVisor divert ptrace from its primary goal (which is debugging) and use it to stop the process at every system call. Once a call is caught, the userspace kernel executes it on behalf of the original process, within userspace. It works well but, as you guessed, there is no free lunch: each interception adds overhead. This method was heavily used by the first virtualisation solutions. But processor vendors rapidly realised that offering hardware-specific acceleration would be highly beneficial (and sell more at the same time).

KVM and other hypervisors leverage such accelerators. Now you even have AWS and Azure deploying completely dedicated coprocessors for handling virtualisation-related acceleration, allowing VMs to run at almost the same speed as a bare metal system.

And like Qemu leveraging KVM, gVisor also offers KVM as an underlying runtime environment. However, there is significant work to be done to enable any container to run on top of it. While ptrace allows you to directly leverage the existing Linux stack, with KVM you need to reimplement a good chunk of the system to make it work. Have a look at the Qemu code to understand the complexity of the task. This is the reason behind the limited set of supported applications, as not all syscalls are implemented yet.
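To make the interception idea a bit more concrete, here is a toy Python model of a userspace kernel. It is only a sketch: it uses neither ptrace nor KVM, it is not how gVisor is implemented, and the class and handler names are invented for illustration. It just shows the shape of the problem: every intercepted call is dispatched to a userspace handler, and anything that has not been reimplemented yet fails with ENOSYS.

```python
import errno


class ToyUserspaceKernel:
    """Toy stand-in for a userspace kernel: it receives 'intercepted'
    system calls by name and serves them from its own handler table."""

    def __init__(self):
        # Only the calls reimplemented so far are listed here.
        self.handlers = {
            "getpid": self._getpid,
            "write": self._write,
        }
        self.output = []  # captured writes, instead of touching the host

    def _getpid(self):
        return 1  # the sandboxed process sees itself as PID 1

    def _write(self, fd, data):
        self.output.append((fd, data))
        return len(data)

    def syscall(self, name, *args):
        handler = self.handlers.get(name)
        if handler is None:
            # Not implemented yet: the application sees ENOSYS.
            return -errno.ENOSYS
        return handler(*args)


kernel = ToyUserspaceKernel()
print(kernel.syscall("getpid"))                                       # 1
print(kernel.syscall("write", 1, b"hello"))                           # 5
print(kernel.syscall("mount", "/dev/sda1", "/mnt") == -errno.ENOSYS)  # True
```

An application that only needs the calls in the table runs fine; one that needs `mount` does not, which is the toy version of the limited application support described above.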

As is, gVisor is probably not ready for production yet. However, it looks like a promising solution providing a middle ground between the Docker approach and the virtualisation one, while taking some of the excellent ideas coming from the unikernel world. I hope that this technology gets picked up and that the KVM runtime becomes the default for gVisor. It would allow the system to benefit from rock-solid hardware acceleration with all the paravirtualisation goodies such as virtio.

Labels: container, docker, google, isolation, kernel, kvm, linux, performance, security, unikernel, virtualization

Monday, May 07, 2018

[Links of the Day] 07/05/2018 : Cryptocurrency Consensus Algorithms , Fast18 conference, Google 2 real world project translation

- A Hitchhiker’s Guide to Consensus Algorithms: this post provides a quick and easy way to understand the classification of the various cryptocurrency consensus models. It's a gentle introduction to the concepts of proof of work vs proof of stake vs proof of authority vs ... well, you get it, many many more algorithms.

- Notes from FAST18 : a very good overview of the storage conference. What has become obvious over the years is that a lot of the practical implementations of novel distributed storage solutions are pushed directly into Ceph. Ceph is poised to become the de facto default private storage solution, even if it has a long way to go in terms of manageability and automation. I think this stems from the preconception that a lot of operations need a storage admin. But projects like Helm are helping it get there.

- xg2xg : a practical translation table between internal Google tech and similar technology available to those who do not work in the chocolate factory. It is a very good list of production-ready projects that can be leveraged in many devops (and non-devops) environments.

Labels: conference, consensus, cryptocurrency, google, links of the day, storage

Thursday, May 03, 2018

[Links of the Day] 03/05/2018 : Fundamental Values of Cryptocurrencies, Kafka SQL streaming engine, SaaS pricing

- Fundamental Values of Cryptocurrencies and Blockchain Technology : a paper looking at the fundamental values of cryptocurrencies. The authors propose fundamental models and a framework to study the price of digital assets. It's an interesting approach; however, they limited their analysis to only two cryptocurrencies, Bitcoin and Ethereum, while there are loads of other cryptocurrencies out there that either derive from these two or are independent. By solely focusing on these two, there is a feeling that the authors are missing the bigger picture, and especially the transient aspect of the cryptocurrency market.

- KSQL : this project offers a streaming SQL engine for Kafka. It basically allows you to use the Kafka Streams engine with the common SQL model.

- SaaS Pricing : a real-life example of why the right SaaS pricing model is SOOO important. It can make or break your business. And you rarely have more than one shot at it.

Labels: blockchain, cryptocurrency, kafka, links of the day, pricing, SaaS, sql

Wednesday, May 02, 2018

State of Intellectual Property protection, or lack thereof, by cloud services providers

In 2015 AWS started making the news regarding the aggressive “Non-Assert Clause” in its terms of service. This type of clause exists to protect cloud providers, in perpetuity, from being sued by their customers for patent, copyright or trademark infringement.

Now, most explanations given for the use of this type of clause tend to revolve around open source and patent troll defence. While patent troll defence is rather obvious, open source defence is slightly more tenuous but still valid: if Amazon uses your open source code (in some way that is a violation of the license), and you use AWS, you can't sue them.

However, it becomes slightly more difficult to digest when such a clause is used to fight a patent suit. It indicates that any cloud provider using such a clause could duplicate your products without fear of legal repercussion.

Fast forward a couple of years: AWS started to feel a little bit more pressure from its competitors and dropped its controversial clause in July 2017. This was perceived as a move to woo the more traditional enterprise market that was starting to adopt cloud deployment, the objective being to reassure more legally astute customers.

A month later, AWS also decided to mimic, to some extent, Microsoft Azure's patent protection scheme. While not as extensive as Azure's offering, this is still a start. Microsoft's shared IP protection is far more comprehensive than AWS's: it expands the company’s existing indemnification policy, makes its patent portfolio available to Azure customers to help them defend themselves against possible infringement suits, and pledges to Azure customers that if Microsoft sells patents to an NPE (non-practicing entity), they can never be asserted against them.

The shared protection scheme is attractive, but also dangerous for the provider. If one customer falls prey to an IP lawsuit and loses because of an infringement by the cloud provider, this can quickly become a complete bloodbath, as every customer might become a target. With such a scheme, the cost can easily snowball. As a result, I can only foresee the biggest players in the field offering such protection.

I decided to do a quick check of the current state of cloud providers' terms of service regarding IP protection, or lack thereof. I specifically tried to pinpoint which ones use the “Non-Assert Clause” and which ones offer shared protection (to varying degrees) in the table below.

| Cloud Provider | Shared protection | No Non-Assert Clause | Reference |
|---|---|---|---|
| AWS | 🗸 (since Aug 2017) | 🗸 (since July 2017) | |
| Azure | 🗸 | 🗸 | |
| Google | 🗸 | 🗸 | |
| Digital Ocean | ⤫ | ⤫ (Clause 15.2) | |
| Oracle | 🗸 | 🗸 | |
| SAP | ⤫ | ⤫ (Clause 10.3) | |
| OVH (VmWare) | ⤫ | 🗸 | |
| Alibaba Cloud | ⤫ | 🗸 | |

Disclaimer: I am not a lawyer, and this is based on documents available at the time of publication. The terms can change at any time and you are free to try to negotiate terms with your cloud providers yourself. In any case, I recommend having a qualified lawyer to give you advice on this matter.

The result: pretty much all cloud providers DO NOT use a Non-Assert clause, except two: Digital Ocean and SAP. While I can understand why Digital Ocean might do that, based on their business model and market size, SAP's aggressive stance seems a little bit more surprising. However, its customers tend to be more legally savvy and might negotiate the clause out more easily.

When it comes to shared protection, half of the providers do not mention any such scheme. As for the other half, your mileage may vary. Azure offers the most comprehensive one, while most of the others that do offer protection tend to offer legal and/or financial support only.

It is clear that, as more institutional customers move to the cloud, providers will gradually need to offer more legal protection regarding intellectual property risks. However, this might come at a cost and risk that only the biggest ones will be able to bear.

Tuesday, May 01, 2018

[Links of the Day] 01/05/2018 : CD over K8s , SQL query parser, NLP annotation tool

- Apollo : The logz.io continuous deployment solution over Kubernetes.

- Query parser : Open source tool for parsing and analyzing SQL. This is a really interesting tool for a specific use case: how do you map and understand the usage of databases and tables in an organization that maintains hundreds or thousands of systems without central coordination and architecture?

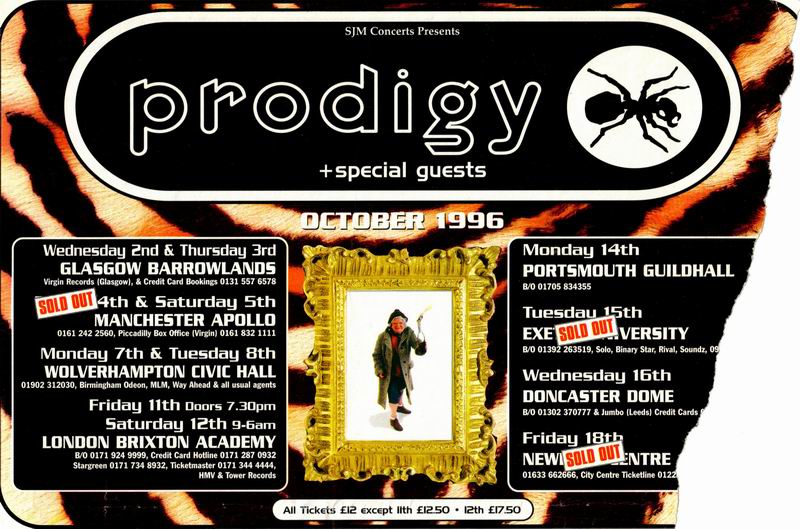

- Prodigy : An annotation tool powered by active learning, made by the creators of spaCy. This really cool tool helps you streamline the creation of models and quickly test hypotheses. Not free :( but to be honest, if you use spaCy (like I do) and need to annotate data for your models, the cost is probably worth it.

Labels: continuous delivery, Kubernetes, links of the day, sql
