- Grafana Loki : this is really cool, like Prometheus but for logs. It looks like a good lightweight alternative to Elasticsearch.

- Google Cloud Adoption Framework : Google is trying to sell you its cloud, but it is a good white paper anyway, describing a form of cloud maturity model for the enterprise.

- Levels : if you ever wondered what a certain job title means and how it stacks up against other companies, search no more! Levels is here to help you understand the intricate world of HR job title hierarchies.
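Loki's central idea, as I understand it, is to index only a small set of labels per log stream (much like Prometheus labels) and to scan the raw log lines at query time, instead of full-text indexing everything the way Elasticsearch does. The toy in-memory sketch below illustrates that trade-off; `ToyLabelLogStore` and its methods are hypothetical and have nothing to do with Loki's actual API or storage format.

```python
from collections import defaultdict


class ToyLabelLogStore:
    """Hypothetical illustration: streams are keyed by labels; line content is never indexed."""

    def __init__(self):
        # Maps a frozen label set (one "stream") to its list of (timestamp, line) entries.
        self._streams = defaultdict(list)

    def push(self, labels, timestamp, line):
        self._streams[frozenset(labels.items())].append((timestamp, line))

    def query(self, selector, contains=""):
        """Select streams whose labels include `selector`, then grep their lines."""
        wanted = set(selector.items())
        results = []
        for stream_labels, entries in self._streams.items():
            # Match on labels only (a real system would use an inverted index here).
            if wanted <= stream_labels:
                # Content filtering is a brute-force scan, like a distributed grep.
                results.extend(line for _, line in entries if contains in line)
        return results


store = ToyLabelLogStore()
store.push({"app": "api", "env": "prod"}, 1, "GET /users 200")
store.push({"app": "api", "env": "prod"}, 2, "GET /orders 500")
store.push({"app": "web", "env": "prod"}, 3, "render 500 page")
print(store.query({"app": "api"}, contains="500"))  # ['GET /orders 500']
```

The payoff of this design is a tiny index (only label sets), at the cost of scanning more data per query.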

A blog about life, Engineering, Business, Research, and everything else (especially everything else)

Thursday, December 13, 2018

[Links of the Day] 13/12/2018 : Prometheus for Logs, Cloud Adoption framework, HR job title comparison website

Labels: cloud, google, hr, links of the day, logs

Tuesday, December 11, 2018

[Links of the Day] 11/12/2018 : Papers : Recognising disguised faces and deceiving neural nets with visual illusions, and the dry history of liquid computers

- Recognizing Disguised Faces in the Wild : you can hide, but not from the almighty computer. In this case it is far more efficient to deceive it (see below).

- Convolutional Neural Networks Deceived by Visual Illusions : as is already known, neural nets can easily be deceived. I think we are quickly going to enter a sort of arms race, probably like the golden age of virus/anti-virus software, where we will see ever more complex recognition and anti-recognition tools in the wild.

- The dry history of liquid computers : it's not all about silicon. You can make logic gates using fluids!

Thursday, December 06, 2018

[Links of the Day] 06/12/2018 : NLP summarisation, RPC protobuf framework, API security best practices

- Fast Abstractive Summarization with Reinforce-Selected Sentence Rewriting : I would have mentioned this paper on the sole basis that the authors provide a GitHub repo with all the code used; this should be mandatory for any publication in computer science. Anyway, the summarization technique described in the paper is pretty cool too. [github]

- twirp : RPC framework with protobuf service definitions. If you don't want to go full gRPC, give this framework a serious look. I would consider twirp over gRPC for the sole reason that it uses the standard Go HTTP server rather than Google's custom one. Seriously Google, why did you have to re-implement the HTTP server of your own language??

- API Security Best Practices : documentation providing some good security practices when it comes to GitHub usage, as well as an excellent leak management document describing a fairly efficient process.

Labels: API, best practices, framework, grpc, NLP, rpc, security, summarisation

Tuesday, December 04, 2018

[Links of the Day] 04/12/2018 : Matrix cookbook, Serverless Containers, C++ network coroutine lib

- The Matrix Cookbook : nothing about Keanu Reeves in a kitchen apron, but a collection of facts about matrices and related matters. A very well-documented desktop reference.

- Firecracker : secure and fast microVMs for serverless computing... This is yet another AWS product that makes you ask why we need Kubernetes. To be honest, I now see K8s as another OpenStack: it's at the top of the hype cycle, but I don't see it going much further anytime soon. This type of tech enables serverless containers, making much of K8s almost a moot point.

- Coro async : C++ coroutine-based networking library

Labels: C++, containers, Kubernetes, mathematics, matrix, virtualization

Thursday, November 29, 2018

[Links of the Day] 29/11/2018 : AWS CloudFormation testing, The Dassault model, Word2Vec Anything

- taskcat : testing tool for AWS CloudFormation templates. It deploys your template in one or more regions and reports any issues.

- A Dassault Dossier : excellent RAND report on Dassault Aviation and how the company was able to deliver the entire French combat aircraft fleet over the past two decades. It's all about focusing on development rather than production.

- Basilica : word2vec for anything. The concept is amazing: seriously, you can get a similarity measure for pretty much any type of data.

Labels: aws, cloudformation, dassault, word2vec

Tuesday, November 27, 2018

[Links of the Day] 27/11/2018 : AWS deployment workflow framework, Dockerize your dev workflow, Nuke your AWS account

- Odin : yet another AWS deployment solution, but this time with Step Functions! The concept is rather ingenious, and I wish we could literally script the CloudFormation deployment cycle using Step Functions and Lambda. There is probably a way using WaitCondition and the like, but it seems extremely convoluted. In the meantime, kudos to Coinbase: I really like it and hope to adopt some of Odin's mechanisms myself.

- Binci : containerize your development workflow with Docker. Personally, I have used Docker Compose for this. I have a bit of an issue with npm/JS as the core language for this type of solution. But all in all, containerizing your development environment and workflow should be standard practice in the industry by now. Sadly, it isn't yet.

- cloud-nuke : want to wipe all the AWS/Azure/GCP resources associated with an account? Use cloud-nuke. Alternative solution: aws-nuke. Nuka-Cola sold separately...

Thursday, November 08, 2018

[Links of the Day] 08/11/2018 : Large-scale study of datacenter network reliability, What to measure in production, Failure Mode and Effects Analysis

- A Large Scale Study of Data Center Network Reliability : the authors study reliability within and between Facebook datacenters. One of the key findings is that the growing complexity, heterogeneity and interconnectedness of datacenters increases the rate of unwanted behaviours. Moreover, this also seems to be a key potential limiting factor for world-spanning infrastructure undergoing rapid organic growth.

- Understanding Production: What can you measure? : what do you need to monitor and measure in production? A very good summary of many blog posts out there.

- Failure Mode Effects Analysis (FMEA) : once you reach a certain production scale and more stringent requirements kick in (unless you were unlucky enough to have them from the get-go), you might want to run a failure modes and effects analysis (FMEA): a step-by-step approach for identifying all possible failures in a design, a manufacturing or assembly process, or a product or service. While it was mainly designed to address shortcomings in the manufacturing industry, it is still extremely useful for IT system analysis, especially when you want to prepare before rolling out a Chaos Monkey-like system.
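A common way to prioritise FMEA output is the Risk Priority Number, RPN = severity × occurrence × detection, with each factor usually rated from 1 to 10 (a higher detection score means the failure is harder to catch). The sketch below ranks a few failure modes of a web service that way; the failure modes and their ratings are hypothetical illustrations, not data from the linked article.

```python
from dataclasses import dataclass


@dataclass
class FailureMode:
    name: str
    severity: int    # 1 (negligible) .. 10 (catastrophic)
    occurrence: int  # 1 (very unlikely) .. 10 (almost certain)
    detection: int   # 1 (always caught) .. 10 (undetectable before impact)

    @property
    def rpn(self) -> int:
        """Risk Priority Number: the classic FMEA prioritisation score."""
        return self.severity * self.occurrence * self.detection


def rank(modes):
    """Return failure modes sorted from highest to lowest risk priority."""
    return sorted(modes, key=lambda m: m.rpn, reverse=True)


# Hypothetical failure modes with illustrative ratings.
modes = [
    FailureMode("primary DB failover stalls", severity=9, occurrence=3, detection=4),
    FailureMode("cache node evicted", severity=3, occurrence=7, detection=2),
    FailureMode("silent data corruption on disk", severity=10, occurrence=2, detection=9),
]

for m in rank(modes):
    print(f"{m.rpn:4d}  {m.name}")
```

Sorting by RPN is only a starting point: a rare but catastrophic failure can hide behind a modest RPN, so many teams also review high-severity items on their own.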

Labels: analysis, chaos, datacenter, links of the day, measure, monitoring, network, production, reliability

Tuesday, November 06, 2018

[Links of the Day] 06/11/2018 : Intro to probabilistic programming, Unit tests for data, Ali Wong stand-up routine analysis

- An Introduction to Probabilistic Programming: a first-year graduate-level introduction to probabilistic programming. It not only provides a thorough background for anyone wishing to use a probabilistic programming system but also introduces the techniques needed to design and build these systems.

- deequ : library built on top of Apache Spark for defining "unit tests for data", which measure data quality in large datasets.

- Ali Wong structure of stand up comedy : fantastic and beautifully designed article analysing Ali Wong's stand-up routine and how it is closer to a TV/movie script than to slapstick one-liner comedy.
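deequ itself is a Scala library running on Spark, and I won't reproduce its API here. The underlying idea of "unit tests for data" (declaring constraints such as completeness, uniqueness or value ranges and checking a dataset against them) can be sketched in plain Python; every function name below is hypothetical.

```python
def is_complete(rows, column):
    """Constraint: no missing (None) values in `column`."""
    return all(row.get(column) is not None for row in rows)


def is_unique(rows, column):
    """Constraint: every value in `column` appears at most once."""
    values = [row.get(column) for row in rows]
    return len(values) == len(set(values))


def in_range(rows, column, low, high):
    """Constraint: every non-missing value of `column` lies in [low, high]."""
    return all(low <= row[column] <= high for row in rows if row.get(column) is not None)


def run_checks(rows, checks):
    """Run named constraints and return the names of the ones that failed."""
    return [name for name, check in checks.items() if not check(rows)]


orders = [
    {"id": 1, "customer": "alice", "amount": 30.0},
    {"id": 2, "customer": None, "amount": 12.5},
    {"id": 2, "customer": "bob", "amount": -4.0},
]

checks = {
    "id is unique": lambda rs: is_unique(rs, "id"),
    "customer is complete": lambda rs: is_complete(rs, "customer"),
    "amount is non-negative": lambda rs: in_range(rs, "amount", 0, float("inf")),
}

print(run_checks(orders, checks))
```

As I understand it, deequ turns such constraints into Spark aggregations so they scale to large datasets; this sketch simply loops over in-memory dicts.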

Labels: comedy, data, links of the day, probabilistic, programming, unit test

Thursday, November 01, 2018

[Links of the Day] 01/11/2018 : multi-platform, multi-architecture CPU emulator framework, Periodic Table of Data Structures, Model-Based Machine learning book

- Unicorn : a QEMU offshoot offering a lightweight multi-platform, multi-architecture CPU emulator framework.

- Periodic Table of Data Structures : the authors try to express the different data structures used in computer science using a universal model. Once completed, formalized and validated, this could be an invaluable help for accelerating decision making in software architecture design. Now if only somebody could do the same for software project management...

- Model-Based Machine learning book : this book tries to teach you how to think like a statistician to solve nontrivial analytical problems. Not many new books cover this skill set, and for this alone the book is a must-read for anybody using machine learning.

Labels: book, data structure, emulation, links of the day, machine learning, qemu

Tuesday, October 30, 2018

[Links of the Day] 30/10/2018 : Python Object CLI generator, Alibaba Distributed File System, Microsoft API Design guideline

- Python-fire : library for automatically generating command line interfaces (CLIs) from absolutely any Python object

- PolarFS : Alibaba's ultra-low latency and failure-resilient distributed file system for shared-storage cloud databases. Keep an eye on this one, as the authors are planning to deliver a TLA+ proof soon. Moreover, I hope they also run a benchmark against GPFS or Lustre rather than Ceph, which is not really competing in the same league.

- API design : pretty much the gold standard in API design. A must-read for anybody designing or using APIs.
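With python-fire the whole thing is a single `fire.Fire(power)` call. To show roughly what "generating a CLI from a Python object" involves under the hood, here is a stdlib-only sketch that builds an argparse parser from a function's signature via `inspect`. It is not how Fire is implemented, and `build_cli` and `run` are hypothetical helpers.

```python
import argparse
import inspect


def build_cli(func):
    """Build an argparse parser whose arguments mirror `func`'s signature."""
    parser = argparse.ArgumentParser(prog=func.__name__, description=func.__doc__)
    for name, param in inspect.signature(func).parameters.items():
        if param.default is inspect.Parameter.empty:
            # No default: required positional argument, converted with the
            # annotation when there is one, otherwise kept as a string.
            has_type = param.annotation is not inspect.Parameter.empty
            parser.add_argument(name, type=param.annotation if has_type else str)
        else:
            # Default present: optional --flag converted to the default's type.
            # (bool and None defaults would need special handling; omitted here.)
            parser.add_argument(f"--{name}", type=type(param.default), default=param.default)
    return parser


def run(func, argv):
    """Parse `argv` against `func`'s signature and call it."""
    args = build_cli(func).parse_args(argv)
    return func(**vars(args))


def power(base: int, exponent=2):
    """Raise base to a power."""
    return base ** exponent


print(run(power, ["3"]))                      # 9
print(run(power, ["2", "--exponent", "10"]))  # 1024
```

Fire goes much further (classes, nested objects, method chaining), which is exactly why it is worth using instead of hand-rolling this.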

Labels: alibaba, API, distributed file system, links of the day, Microsoft

Thursday, October 25, 2018

[Links of the Day] 25/10/2018 : Distributed AI framework, Reverse proxy API gateway, AirBnB Change data capture service

- Ray : A Distributed Framework for Emerging AI Applications [Github]

- annon.api : Configurable API gateway that acts as a reverse proxy with a plugin system.

- SpinnalTap : Change Data Capture (CDC) service capable of detecting data mutations with low-latency across different data sources, and propagating them as standardized events to downstream consumers.

Labels: ai, airbnb, API, change data capture, framework, gateway, links of the day

Tuesday, October 23, 2018

[Links of the Day] 23/10/2018 : bash history tool, Kubernetes security issue hunter, Kafka recovery toolkit

- hstr : Bash and Zsh shell history suggest box that allows you to easily view, navigate, search and manage your command history.

- Kube Hunter : an open-source tool that seeks out security issues in Kubernetes clusters. The objective is to increase awareness and visibility of the security controls in Kubernetes environments. [github]

- Kafka-Kit : set of tools for Kafka data mapping and recovery. Quite useful when you get into a pickle and you need to fix your Kafka topics.

Labels: bash, kafka, Kubernetes, links of the day, tools

Thursday, October 18, 2018

[Links of the Day] 18/10/2018 : Rust conf 2018 videos, Financial modeling for startups, Baidu RPC framework

- brpc : Baidu's enterprise-grade RPC framework. The performance numbers look really impressive, but sadly most of the docs are in Chinese. I don't really know how committed Baidu is to open source, or whether it is culturally attuned to running an open source community. But I think we need to keep a close eye on all the recent open source announcements from Chinese companies and see how they withstand the test of time. Last but not least, you might note that Chinese developers tend to prefer QQ over Slack as a discussion medium.

- Financial Modeling for Startups : an interesting piece laying out the core elements of startup financial modelling. It can be of great help for entrepreneurs who want to wrap their heads around the difficult financial aspects of creating and running a company.

- RustConf 2018 : videos from RustConf 2018.

Labels: baidu, financial modeling, links of the day, rpc, rust

Tuesday, October 16, 2018

[Links of the Day] 16/10/2018 : Cryptographic Library by Google, Rust CSV toolkit, RISELab Fast K/V store

- Tink : cryptographic software library by Google offering a multi-language, cross-platform cryptographic solution. [Github]

- xsv : A fast CSV command line toolkit written in Rust.

- Anna : from the AMPLab, now RISELab, which brought you tons of goodies such as Spark and Mesos, comes Anna, a fast KV store. This project is really interesting because the folks at RISELab are doing genuinely advanced applied research. Their results are impressive as well as business-grounded. For example, the K/V store provides 8x the throughput of AWS ElastiCache and 355x the throughput of DynamoDB, which is already impressive, and even more so when you realise that the comparison is made at a fixed price point.

Labels: cryptography, csv, google, key/value store, links of the day, riselab, rust

Thursday, October 11, 2018

[Links of the Day] 11/10/2018 : Go powerline, Kubernetes context switcher, Notebook scaling at Netflix

- Powerline-go : a nice low-latency Powerline-style shell prompt written in Go. Give it a try.

- Kubernetes Context Switcher : another practical tool, allowing you to seamlessly switch between Kubernetes contexts.

- Notebook @ netflix : notebooks are now the default tool for data scientists, and Netflix shows how it scales this tool to accommodate its ever-increasing data-crunching needs.

Labels: bash, datascience, golang, Kubernetes, links of the day, netflix, notebook, shell, tools

Thursday, September 27, 2018

[Links of the Day] 27/09/2018: data-centric internet master's thesis, Critique of deep learning, Fairer machine learning

- Scalable mobility support in future internet architectures : MIT master's thesis by Xavier K. Mwangi, in which he argues for a move away from a host-centric (IP) approach toward a data-centric one, where the naming and routing scheme revolves around an object name resolution architecture. The argument behind this is to eliminate the tight coupling between location and data and allow a more fluid interaction, especially in an ever more mobile world.

- Deep Learning: A Critical Appraisal : critical analysis of the recent deep learning revival, and the author's argument that deep learning will need to be supplemented, if not replaced, by other techniques if we want to progress toward artificial intelligence.

- Delayed impact of fair machine learning : a paper that tries to answer the central question of fairness in machine learning algorithms, i.e. how to ensure fair treatment across demographic groups in a population when we let a machine learning system decide who gets an opportunity (e.g. is offered a loan) and who doesn’t.
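One of the simplest fairness criteria discussed in this literature is demographic parity: comparing the rate at which each group receives the positive decision. The paper's actual point is subtler (it studies the delayed, longer-term impact of imposing such criteria), so treat this only as an illustration of what "fair treatment across groups" can be measured against; the groups and decisions below are made up.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Fraction of positive decisions (e.g. loan approved) per group.

    `decisions` is an iterable of (group, approved) pairs.
    """
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += int(ok)
    return {g: approved[g] / totals[g] for g in totals}


def demographic_parity_gap(decisions):
    """Largest difference in selection rate between any two groups (0 = parity)."""
    rates = selection_rates(decisions).values()
    return max(rates) - min(rates)


# Hypothetical loan decisions for two groups, A and B.
decisions = [("A", True), ("A", True), ("A", False), ("A", True),
             ("B", True), ("B", False), ("B", False), ("B", False)]

print(selection_rates(decisions))         # {'A': 0.75, 'B': 0.25}
print(demographic_parity_gap(decisions))  # 0.5
```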

Labels: architecture, deep learning, internet, links of the day, machine learning, paper, thesis

Tuesday, September 25, 2018

[Links of the Day] 25/09/2018 : Investigating opaque algorithms, Good Go Makefile, Uber metric storage platform

- Investigating opaque algorithms : eye-opening collection of journalism that investigates, audits, or critiques algorithms in society. It is amazing how often the impact of code is overlooked, given that it affects so many lives on a daily basis.

- A Good Makefile for Go : even with Go you cannot escape the mighty Makefile.

- M3 : Uber's metrics platform, built as a storage backend for Prometheus. It handles all the compaction, compression and data aggregation so you don't have to pay huge AWS bills at the end of the month because your hyper-scaled infrastructure generates terabytes of metrics... Oh wait, you still do, but at least not because of your monitoring storage cost :) [github]

Labels: algorithm, investigation, journalism, links of the day, metric, storage, uber

Thursday, September 20, 2018

[Links of the Day] 20/09/2018 : Arxiv paper viewer, Artificial intelligent atomic force microscope, What they don't teach you running a business by yourself

- Arxiv Vanity : if you are like me and read a lot of papers from arXiv, this website will save you a ton of time. It renders arXiv papers as web pages so you don't have to download and decipher the PDF. It makes life so much easier on mobile when you can't wait to read the latest paper on deep learning kitten recognition.

- Artificial Intelligent Atomic Force Microscope Enabled by Machine Learning : the authors demonstrate how you can use artificial intelligence with an atomic force microscope for pattern recognition and feature identification.

- Things they don’t teach you running a business by yourself : great short post on the different aspects of running a small business by yourself. If you want to start your own business, I would also advise reading "Start Small, Stay Small" by Rob Walling and Mike Taber. It was an eye-opener: you don't have to go big with your business. Instead, you can run ten businesses simultaneously, diligently managing and tracking your time to run each one as efficiently as possible. It doesn't matter if one falters. This approach allows you to create a comfortable cushion and increases the chance of a higher payoff.

by dahlig

Labels: academia, Artificial intelligence, business, links of the day, microscope, papers, viewer

Tuesday, September 18, 2018

[Links of the Day] 18/09/2018 : Data transfer project, Observability pipeline, and Operating systems Book

- Data Transfer Project : open-source, service-to-service data portability platform. Not really sure who would want to transfer data between Facebook, Google and Microsoft from a privacy point of view... but there is probably a use case.

- Veneur : distributed, fault-tolerant pipeline for observability data. This is a really cool project that allows you to aggregate metrics and send them downstream to one or more supported storage sinks. It can also act as a global aggregator for histograms, sets and counters. The key advantage of this approach is that you only maintain, store (and pay for) the aggregated data rather than tons of separate data points.

- Operating Systems - Three Easy Pieces : free operating systems book centred around three conceptual pieces that are fundamental to operating systems: virtualization, concurrency, and persistence.
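The cost argument for an aggregation tier like Veneur is easy to see in miniature: instead of shipping every raw sample, you ship a few summary numbers per metric per flush interval. The sketch below is a hypothetical local aggregator (not Veneur's code or wire format) that collapses counter increments into a sum and timer samples into count/min/max/percentiles.

```python
import math


def percentile(sorted_values, q):
    """Nearest-rank percentile of an already sorted, non-empty list (0 < q <= 100)."""
    rank = math.ceil(q * len(sorted_values) / 100)
    return sorted_values[max(rank, 1) - 1]


class FlushAggregator:
    """Hypothetical aggregator: buffers raw samples, emits summaries on flush."""

    def __init__(self):
        self.counters = {}
        self.timers = {}

    def incr(self, name, value=1):
        self.counters[name] = self.counters.get(name, 0) + value

    def timing(self, name, ms):
        self.timers.setdefault(name, []).append(ms)

    def flush(self):
        """Return one summary record per metric and reset the buffers."""
        out = dict(self.counters)
        for name, samples in self.timers.items():
            s = sorted(samples)
            out[name] = {"count": len(s), "min": s[0], "max": s[-1],
                         "p50": percentile(s, 50), "p99": percentile(s, 99)}
        self.counters, self.timers = {}, {}
        return out


agg = FlushAggregator()
for _ in range(1000):
    agg.incr("requests")
for ms in range(1, 101):
    agg.timing("latency_ms", ms)

summary = agg.flush()
print(summary["requests"])    # 1000
print(summary["latency_ms"])  # {'count': 100, 'min': 1, 'max': 100, 'p50': 50, 'p99': 99}
```

Here 1,100 raw samples become two records. As far as I know, Veneur uses approximate sketches (t-digest) so percentiles can be merged across hosts; exact sorting is a simplification that only works on a single node.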

Labels: book, data, data transfer, distributed system, links of the day, observability, operating system, pipeline, platform

Thursday, September 13, 2018

[Links of the Day] 13/09/2018 : Kubernetes in Docker, Forensic Diffing AWS image, Consistent File system on top of S3

- kind : Kubernetes-in-Docker, a single-node cluster to run your CI tests against that's ready in 30 seconds.

- diffy : Diffy allows a forensic investigator to quickly scope a compromise across cloud instances during an incident, and triage those instances for followup actions.

- Snitch : Box created a Virtually Consistent FileSystem built on top of S3: an interesting solution that allows Box to prevent data loss by building a consistent system on top of eventually consistent storage. Sadly, not open sourced...

Labels: consistency, docker, filesystem, forensic, Kubernetes, links, s3, security

Wednesday, September 12, 2018

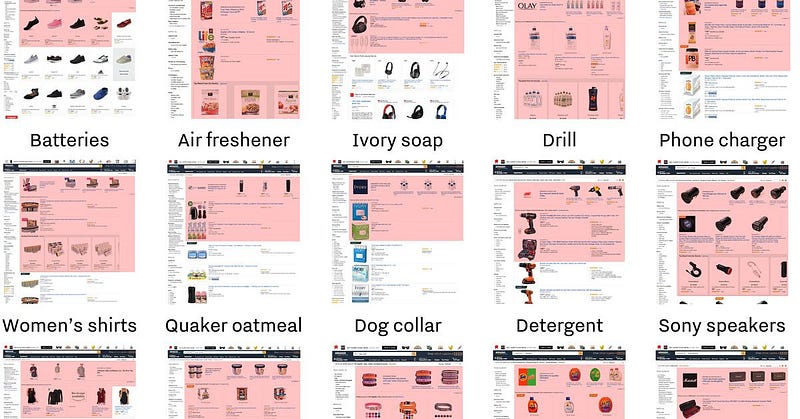

Amazon ads stuffing and upcoming “China Crisis.”

This article on #amazon stuffing its search results with ads reminded me of the tipping point when I switched from #altavista to #google search.

When the results are optimised to sell you something rather than tuned to answer your search, users start to lose confidence in the service.

As David J. Carr suggested:

This behaviour is probably rooted in the tension between Bezos’s “I’m sceptical of any mission that has advertisers at its centrepiece” and Olsavsky’s “Our strategy is to make the customer experience additive by the ad process”.

Moreover, it indicates a shift toward quantity over quality, as they are probably knowingly accepting that they can’t guarantee the quality and authenticity of the products sold by the swarm of third-party vendors under the Amazon brand (as in, via the Amazon website).

Last but not least, brands and sellers are increasingly competing with the three-week product lifecycle of pseudo-brands. A Chinese producer can bring a smartwatch lookalike to market within five days and, using these self-serve ad platforms, can outspend them 100x at the bottom of the funnel.

As it becomes hard to compete with these, Amazon is naturally banking more and more on its logistics and distribution platform advantage. As the pseudo-brand lifecycle shortens, the only part of the product experience that will remain constant is the delivery experience.

Tuesday, September 11, 2018

[Links of the Day] 11/09/2018 : Impact of legalizing Marijuana on housing market, Taleb's technical incerto

- Does legalizing retail marijuana generate more benefits than costs? : the authors look at the legalization of retail marijuana in Colorado and try to quantify its impact by analysing changes in the housing market. Spoiler: legalization seems to have had a significant positive impact on house prices, due to a strong increase in housing demand.

- Nassim Nicholas Taleb's Technical Incerto: a mathematical parallel version of Taleb's Incerto books:

- The Statistical Consequences of Fat Tails: a look at fat tails and what they mean from different points of view as well as their real-life implications

- Silent Risk : provides a mathematical framework for decision making and the analysis of (consequential) hidden risks

- Convexity, Risk and Fragility : a look at why decision theory is not about understanding the world, but about getting out of trouble and ensuring survival.

Labels: economics, fat tail, housing market, links of the day, marijuana, Nassim Nicholas Taleb, risk, statistics

Tuesday, August 14, 2018

[Links of the Day] 14/08/2018: high-perf analytics database, Cloud events specs, Large scale system design

- LocustDB : Massively parallel, high-performance analytics database.

- CloudEvents Specifications : CNCF effort to create a specification for describing event data in a common way.

- System Design Primer : really cool set of documents to help any developer learn how to design large-scale systems.
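To give a feel for what the CloudEvents spec standardises: an event is an envelope of context attributes around your payload. The sketch below builds and minimally validates such an envelope as JSON, assuming the attribute names of the later 1.0 version of the spec (`specversion`, `id`, `source` and `type` required; `time` and `datacontenttype` optional). The 2018 drafts used different attribute names, and the `com.example.*` event type and `/shop/orders` source are hypothetical.

```python
import json
import uuid
from datetime import datetime, timezone

# Required context attributes in CloudEvents 1.0 (assumption: the 2018 drafts differed).
REQUIRED = ("specversion", "id", "source", "type")


def make_event(event_type, source, data):
    """Wrap `data` in a CloudEvents-style JSON envelope."""
    return {
        "specversion": "1.0",
        "id": str(uuid.uuid4()),        # must be unique per source
        "source": source,               # URI-reference identifying the producer
        "type": event_type,             # reverse-DNS style event type
        "time": datetime.now(timezone.utc).isoformat(),
        "datacontenttype": "application/json",
        "data": data,
    }


def validate(event):
    """Return the list of missing required attributes (empty list = valid)."""
    return [attr for attr in REQUIRED if not event.get(attr)]


event = make_event("com.example.order.created", "/shop/orders", {"order_id": 42})
print(json.dumps(event, indent=2))
print(validate(event))                   # []
print(validate({"specversion": "1.0"}))  # ['id', 'source', 'type']
```

The value of the spec is precisely this shared envelope: routers and middleware can act on `type` and `source` without understanding the payload.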

Labels: cloud, database, events, links of the day, system design

Thursday, August 09, 2018

[Links of the Day] 09/08/2018 : Consciousness and integrated information, Optical FPGA, Events DB

- Making Sense of Consciousness as Integrated Information : in this paper, the authors argue that we currently have a dissociation between cognition and experience, and that it might have an impact in a future hyper-connected world.

- Towards an optical FPGA : it looks like programmable silicon photonic circuits are the next frontier in hardware acceleration. Converting light into electrical signals has rapidly become too expensive, and modern CPUs have a hard time coping with the pace of evolution of networking capabilities.

- TrailDB : tool for storing and querying series of events. Fast, small, efficient.

Labels: consciousness, database, events, fpga, links of the day

Tuesday, August 07, 2018

[Links of the Day] 07/08/2018 : programming languages papers, terraform interactive visualization, self contained executable python file

- Papers on programming languages: papers about programming ideas from the '70s that are still relevant today.

- blast-radius : extremely useful tool that generates interactive visualizations of Terraform dependency graphs.

- XAR : self-contained executables for Python applications. Each one is a single, highly compressed file containing all the necessary executable dependencies. It pretty much lets you deliver the equivalent of a single Go binary, but with Python. [Github]

Labels: facebook, links of the day, programming languages, terraform

Thursday, July 26, 2018

[Links of the Day] 26/07/2018 : CEO book, Middle Income Trap, Brexit and the future of European Geopolitics

- The Great CEO Within : a good read on what it means to be a CEO and build up a company from scratch. It provides some good advice and hints at challenges that are not often talked about.

- Convergence vs. The Middle Income Trap: The Case of Global Soccer : a really good and eye-opening paper on the middle-income trap that nations can fall into. The authors argue that the transfer of technologies, skills and best practices fosters a catch-up process. But there are limits: by analysing national soccer teams' progress over their history, they demonstrate that, like their economies, these nations hit a plateau.

- Global Britain and the future of European Geopolitics: how Brexit is affecting the geopolitical landscape in Europe and its relationship with the rest of the world (especially Russia).

Labels: brexit, ceo, economics, europe, geopolitic, income, links of the day, UK

Tuesday, July 24, 2018

[Links of the Day] 24/07/2018 : Physics of baking a good pizza, Stagnation of incomes despite Economic growth, Asciinema

- The Physics of baking good Pizza : when physicists try to bake good pizza, they write a paper about it.

- Stagnating median incomes despite economic growth : a look at the reasons behind income stagnation despite almost continuous economic growth over the past 20+ years. One of the worrying aspects is that it points to an increase in wealth inequality, which can lead to social unrest. Another interesting aspect is that the USA is a clear outlier, as its wage growth was significantly lower than in other OECD countries. This could provide a hint as to why the country is currently in such turmoil, as the inequality gap has widened sharply over the past couple of years.

- asciinema : record your terminal sessions and replay them... the right way. You can even convert your casts to GIFs with asciicast2gif!

Labels: ascii, economic growth, economics, income, links of the day, physics, pizza

Thursday, July 19, 2018

[Links of the Day] 19/07/2018 : High perf distributed data storage, Economics of Landmines and ARPA model

- Crail : high-performance distributed data store designed for fast sharing of ephemeral data in distributed data processing workloads

- The economics of landmines : an economics paper on the effects of post-war landmine contamination on the population and the economy as the mines slowly get cleared. I would guess that, to some extent, the same models could be applied to areas contaminated by toxic waste.

- ARPA Model : how the Advanced Research Projects Agency (ARPA) model works and how it has evolved since its inception in 1958. The authors also look at the challenges the agency faces in the years to come.

Labels: arpa, economics, landmines, links of the day

Tuesday, July 17, 2018

[Links of the Day] 17/07/2018 : Terraform collaboration tool, Cloud cost optimization, Oldschool NYC union negotiation

- Atlantis : extremely useful framework for managing Terraform scripts that are shared and used within and across teams. It streamlines your whole operation by running Terraform plan and apply from pull requests, making the integration with your CI/CD seamless.

- Cloud Cost Optimization : article expanding on Adrian Cockcroft's paper [ACM paper, Slide deck]. It gives a good overview of the best practices out there for managing cloud cost and also skims over the many pitfalls of cost optimization. As with premature optimization in software development, a lot of cloud customers spend way too much time trying to optimize cost early on, with little to no effect. A good, quick read that can help you get in the right mindset when you need to set up a doctrine for controlling and improving your cloud cost management.

- Old School NYC Union negotiation : wow, this video could have come straight out of a Scorsese mafia movie. But no, this is a real-life NYC union negotiation... which seems to go like this: rambling... somebody talks loudly and makes a point while pointing a finger... back to everybody arguing and ambient rambling.

Labels: cloud, cost, links of the day, negotiation, nyc, optimization, terraform

Thursday, July 12, 2018

[Links of the Day] 12/07/2018 : MIT Career Handbook, Visual Introduction to Machine learning, MySQL HA @ GitHub

- MIT Career Development Handbook : Really well-made handbook on career development by MIT.

- Visual Introduction to Machine Learning : a really intuitive introduction to machine learning with great visuals, making it a breeze to understand the underlying principles of machine learning.

- Github Mysql HA : how GitHub makes its MySQL highly available. There are many alternatives out there, such as Galera, but GitHub decided to go its own way. My guess is that their scale requires specific characteristics that Galera et al. can't deliver.

Labels: career, github, high availability, links of the day, machine learning, MIT, mysql

Tuesday, July 10, 2018

[Links of the Day] 10/07/2018 : Exploring the ARPA model, Standalone Dockerfile and OCI image builder, Log based Transactional graph engine

- ARPA Model : why it has worked well and, to some extent, still does. An interesting paper to read.

- img : standalone, daemon-less, unprivileged Dockerfile and OCI-compatible container image builder. If you want to safely build Docker images in K8s or other environments without a full Docker setup, this is the tool for you. [blog]

- LemonGraph : log-based transactional graph engine by the NSA. Graph DBs are slowly percolating through the industry as a valuable tool for mapping connections between entities, and it's not surprising that intelligence agencies use them.

Labels: ARPA, dockerfile, funding, Graph engine, links of the day, OCI

Thursday, July 05, 2018

[Links of the Day] 05/07/2018 : Architecture of Open Source applications, Awesome CI, Searching for secrets in Git history

- Architecture of Open Source Applications : a fantastic set of books that go over the architecture of open source applications, from how programmers solve interesting problems in 500 lines of code (or less) to the performance and architecture of open source projects.

- Awesome-ci : awesome list of CI-related articles, papers and tools.

- gitleaks : a useful tool that searches your Git repo history for secrets and keys. Sanitize all the things!
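At its core, a secret scanner like gitleaks runs a set of regular expressions over every line it is given. Below is a tiny stdlib sketch of that matching step, over plain text rather than Git history, with two illustrative rules: the `AKIA` prefix of AWS access key IDs and PEM private-key headers. These patterns are my own simplifications, not gitleaks' actual rule set.

```python
import re

# Illustrative rules (simplified; real scanners ship many more, carefully tuned patterns).
RULES = {
    "aws-access-key-id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private-key": re.compile(r"-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----"),
}


def scan(text):
    """Return (line_number, rule_name, match) for every suspected secret."""
    findings = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for name, pattern in RULES.items():
            for m in pattern.finditer(line):
                findings.append((lineno, name, m.group(0)))
    return findings


sample = """\
region = eu-west-1
aws_access_key_id = AKIAIOSFODNN7EXAMPLE
-----BEGIN RSA PRIVATE KEY-----
"""
for finding in scan(sample):
    print(finding)
```

To cover history rather than the working tree, you would feed such a scanner the output of `git log -p`, which is where the real value of a tool like gitleaks lies: secrets deleted from HEAD are still sitting in old commits.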

Labels: awesome, continuous integration, git, links of the day, security

Tuesday, July 03, 2018

[Links of the Day] 03/07/2018 : Information Theory introduction, Deep Reinforcement learning doesn't work (yet), Rust based Unikernel

- A Mini-Introduction To Information Theory : a cool introduction to the concept. Nice and succinct.

- Deep Reinforcement Learning Doesn't Work Yet : there is a lot of buzz around using machine learning for health care. In the UK, the prime minister just announced a massive programme to leverage AI to help the NHS tackle cancer and other ailments. However, as this post suggests, we are still a very long way from a practical solution. I feel there is a bit too much hype, and with it funds diverted from solutions that would actually benefit the overstretched health care system now. I'm not saying that ML will not work, just that there are better ways to spend the money than throwing IT at the problem.

- A Rust-based Unikernel : I love unikernel tech... and now we have a nice Rust one.
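The central quantity in information theory is Shannon entropy, H(X) = -Σ p(x) log₂ p(x), the average number of bits needed to encode outcomes of X. A few lines are enough to get a feel for it:

```python
import math


def entropy_bits(probabilities):
    """Shannon entropy in bits; zero-probability outcomes contribute nothing."""
    return -sum(p * math.log2(p) for p in probabilities if p > 0)


print(entropy_bits([0.5, 0.5]))            # 1.0  (fair coin: one bit per toss)
print(entropy_bits([0.25] * 4))            # 2.0  (fair 4-sided die)
print(round(entropy_bits([0.9, 0.1]), 3))  # 0.469 (biased coin is more predictable)
```

The biased coin carries less than half a bit per toss, which is exactly why predictable data compresses well.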

Thursday, June 28, 2018

[Links of the Day] 28/06/2018 : Operational CRDT & causal trees, the story of ISPC and the Larrabee compiler, Limitations of gradient descent

- Causal Trees & Operational CRDTs : educational project showing how to use CRDTs for real-time document sharing and editing.

- The story of ispc : the history of Intel's Larrabee-era compiler. It seems that Intel missed the mark there and was significantly too early for the deep learning onslaught. To some extent, the ISPC model could have significantly bridged the gap between GPU and CPU for machine learning computation.

- The limitations of gradient descent as a principle of brain function : looks like emulating more complex brain function will not work by using gradient descent methods. While this strategy was quite successful for deep learning it seems that there is some inherent limitation to a more generic brain functions emulation as the authors describe.

Labels: compiler, crdt, gradient descent, links of the day

Tuesday, June 26, 2018

[Links of the Day] 26/06/2018 : How economists got Brexit wrong, Driving data set, CRDT @ redis

- How the economics profession got it wrong on Brexit : economists got the economy wrong... News at 11. Anyway, it's a very good analysis of the pitfalls that the various groups fell into, and a good read to get a better understanding of the UK economy and how it reacts to large socio-economic events.

- BDD100K : want data for your driverless car? Berkeley has you covered. [data][paper]

- CRDT @ redis : I love CRDTs, and I love this talk about their use in Redis.
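For readers new to CRDTs, the simplest example is probably the grow-only counter (G-Counter). The Python sketch below is a generic textbook version, not how Redis implements its CRDTs: each replica only increments its own slot, and merging takes the per-replica maximum, so all replicas converge to the same value without any coordination.

```python
from dataclasses import dataclass, field


@dataclass
class GCounter:
    """Grow-only counter CRDT: one slot per replica, merged by element-wise max."""

    replica_id: str
    counts: dict = field(default_factory=dict)

    def increment(self, amount: int = 1) -> None:
        if amount < 0:
            raise ValueError("a G-Counter can only grow")
        self.counts[self.replica_id] = self.counts.get(self.replica_id, 0) + amount

    def value(self) -> int:
        return sum(self.counts.values())

    def merge(self, other: "GCounter") -> None:
        # Element-wise max is commutative, associative and idempotent,
        # so replicas converge whatever order (or how often) merges arrive.
        for rid, n in other.counts.items():
            self.counts[rid] = max(self.counts.get(rid, 0), n)
```

Supporting decrements requires pairing two of these (a PN-Counter); richer types such as sequences for collaborative editing build on the same merge-without-coordination idea.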

Labels: brexit, car, crdt, data, economics, links of the day, machine learning, redis

Thursday, June 21, 2018

[Links of the Day] 21/06/2018 : TCP's BBR, Hierarchical convolutional neural network, The Government IT self-harm playbook

- BBR : BBR seems like a great alternative to CUBIC or Reno for server-side optimization. However, you have to be a little bit careful if you run a mixed environment, as a server running BBR will literally asphyxiate other servers running CUBIC within the same environment.

- Tree-CNN : the authors describe a Hierarchical Deep Convolutional Neural Network and demonstrate that they are able to achieve greater accuracy with a lower training effort than existing approaches.

- Government IT Self-Harm Playbook : this is a must-read for anybody in IT, be it a small or large corporation, private or public organization. To be honest, I can see these types of mistakes happening all over the corporate world. They are just easier to spot in large corporations undergoing "digital transformation". Anyway, read it and learn from it.
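A rough idea of why BBR behaves so differently from CUBIC: instead of reacting to packet loss, it builds a model of the path from two estimates, the bottleneck bandwidth (the maximum recent delivery rate) and the round-trip propagation time (the minimum recent RTT), and sizes its in-flight data around their product, the bandwidth-delay product. The Python sketch below captures only that estimator. The sample-count windows and the cwnd gain of 2 are simplifying assumptions; the real Linux implementation uses time-based windows and adds startup, drain and probing phases on top.

```python
from collections import deque


class BbrModel:
    """Toy BBR-style path model: windowed max bandwidth and windowed min RTT."""

    def __init__(self, bw_window: int = 10, rtt_window: int = 10, cwnd_gain: float = 2.0):
        # Windows are counted in samples here for simplicity (an assumption).
        self.bw_samples = deque(maxlen=bw_window)    # delivery-rate samples, bytes/s
        self.rtt_samples = deque(maxlen=rtt_window)  # RTT samples, seconds
        self.cwnd_gain = cwnd_gain

    def on_ack(self, delivered_bytes: float, interval_s: float, rtt_s: float) -> None:
        # Each ACK yields one delivery-rate sample and one RTT sample.
        self.bw_samples.append(delivered_bytes / interval_s)
        self.rtt_samples.append(rtt_s)

    def btl_bw(self) -> float:
        return max(self.bw_samples)

    def rt_prop(self) -> float:
        return min(self.rtt_samples)

    def bdp_bytes(self) -> float:
        # Bandwidth-delay product: how much data fits "in the pipe".
        return self.btl_bw() * self.rt_prop()

    def cwnd_bytes(self) -> float:
        return self.cwnd_gain * self.bdp_bytes()

    def pacing_rate(self, pacing_gain: float = 1.0) -> float:
        return pacing_gain * self.btl_bw()
```

Because the sending rate is driven by this model rather than by loss signals, BBR does not back off the way a loss-based algorithm does, which is part of why sharing a bottleneck with CUBIC flows can turn out so unfair.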

Labels: doctrine, government, IT, links of the day, networking, neural networks, tcp

Tuesday, June 19, 2018

[Links of the Day] 19/06/2018 : Facebook network balancer, Open policy agent, Intel NLP libs

- OPA : an open source policy agent that decouples policy from the actual code logic. This is essential to provide great flexibility with fine-grained control over resources. These kinds of features are a key building block for secure and robust API-based solutions. [github]

- Katran : Facebook's scalable network load balancer. It relies on eBPF and XDP in the Linux kernel to deliver impressive performance at low cost, thanks to its ability to run on off-the-shelf hardware. [github]

- NLP Architect : Intel's NLP library and solution. Sometimes I feel that Intel has some great hardware and software, but the release cycles are rather decoupled, which often leaves the user in an odd situation where the hardware is out but the software is not there yet, and sometimes the opposite. I really feel that Intel should work on this. Maybe they should spin the software off into a separate entity, as the hardware culture might be impeding the software side of the company.
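To illustrate what "decoupling policy from code logic" buys you, here is a minimal Python sketch. It is neither Rego nor OPA's API (OPA policies are written in Rego and queried over a REST API or embedded as a library); the rule format, `decide` and `delete_report` are hypothetical names chosen only to show the idea of keeping the rules out of the service code.

```python
# Policy lives in data (e.g. loaded from a JSON file or a policy service),
# not in the handler code. This rule format is a hypothetical example.
POLICY = {
    "default": "deny",
    "rules": [
        {"effect": "allow", "method": "GET", "path_prefix": "/reports/", "roles": ["analyst", "admin"]},
        {"effect": "allow", "method": "DELETE", "path_prefix": "/reports/", "roles": ["admin"]},
    ],
}


def decide(policy: dict, request: dict) -> bool:
    """Generic evaluator: knows nothing about the service, only the rule format."""
    for rule in policy["rules"]:
        if (
            request["method"] == rule["method"]
            and request["path"].startswith(rule["path_prefix"])
            and request["role"] in rule["roles"]
        ):
            return rule["effect"] == "allow"
    return policy["default"] == "allow"


def delete_report(request: dict) -> str:
    # The service only asks for a decision; changing who may delete
    # reports means editing POLICY, not this function.
    if not decide(POLICY, request):
        return "403 Forbidden"
    return "204 No Content"
```

The design choice is the point: the evaluator and the policy can be versioned, tested and audited independently of the services that consult them.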

Labels: ebpf, facebook, intel, links of the day, networking, NLP, policy, xdp

Thursday, June 14, 2018

[Links of the Day] 14/06/2018 : GDPR documentation template, Survey of vector representations of meaning, Supervised learning by quantum neural networks

- A Survey on Vector Representations of Meaning : the paper presents an overview of the current state of word vector model research. The survey is quite useful when you need to choose a vector model for your NLP application, as each model comes with different tradeoffs.

- EverLaw GDPR documentation Template : a highly practical and down-to-earth document that helps you classify your current status regarding GDPR and understand your exposure to it. To some extent, filling it in is almost a mandatory first step for any company out there that deals with individuals' information.

- Supervised learning by Quantum Neural Networks : what's better than neural networks? Quantum neural networks!!!
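Whichever vector model you pick from the survey, the resulting vectors are typically compared with cosine similarity. A tiny Python illustration; the 3-dimensional vectors are made up for the example, whereas real embeddings have hundreds of dimensions and come from a trained model.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length, non-zero vectors."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same dimension")
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        raise ValueError("cosine similarity is undefined for zero vectors")
    return dot / (norm_u * norm_v)


# Toy vectors invented for illustration, not taken from any real model.
VECTORS = {
    "king": [0.8, 0.6, 0.1],
    "queen": [0.7, 0.7, 0.1],
    "apple": [0.1, 0.2, 0.9],
}


def most_similar(word, vectors):
    """Nearest other word by cosine similarity."""
    others = (w for w in vectors if w != word)
    return max(others, key=lambda w: cosine_similarity(vectors[word], vectors[w]))
```

With these toy values, `most_similar("king", VECTORS)` picks `"queen"` over `"apple"`.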

Labels: gdpr, law, links of the day, neural networks, NLP, quantum, supervised learning

Tuesday, June 12, 2018

[Links of the Day] 12/06/2018 : Type checking for Python, Golang web scraper, Google Style Guide

- Pyre : fast type checking for Python from the Facebook crowd. Written in OCaml.

- Colly : a web scraper and crawler framework in Golang. I really like Scrapy, but I think Colly has some good potential, even if speed is often not the main characteristic of scrapers. Actually, you really want a good rate-limiting mechanism if you want to avoid crashing the website you scrape.

- Google Style Guides : all the style guides for the different programming languages used at Google.
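On the rate-limiting point: the simplest polite-crawler policy is a minimum delay between consecutive requests to the same host. A minimal Python sketch, not Colly's API; `DomainRateLimiter` is a hypothetical name, and the injectable clock and sleep functions are there only to make it testable.

```python
import time
from urllib.parse import urlparse


class DomainRateLimiter:
    """Enforce a minimum delay between consecutive requests to the same host."""

    def __init__(self, min_delay_s: float, clock=time.monotonic, sleep=time.sleep):
        self.min_delay_s = min_delay_s
        self.clock = clock
        self.sleep = sleep
        self.last_request = {}  # host -> timestamp of the last request

    def wait(self, url: str) -> float:
        """Block until the URL's host may be hit again; return the time slept."""
        host = urlparse(url).netloc
        now = self.clock()
        last = self.last_request.get(host)
        waited = 0.0
        if last is not None:
            waited = max(0.0, last + self.min_delay_s - now)
            if waited > 0:
                self.sleep(waited)
        self.last_request[host] = self.clock()
        return waited
```

You would call `limiter.wait(url)` right before each fetch. This version is single-threaded; a real crawler would also cap the number of parallel requests per domain.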

Labels: facebook, google, links of the day, python, scrapper

Thursday, June 07, 2018

[Links of the Day] 07/06/2018 : Quantum algo for beginners, Dynamic branch prediction and Running Python in Go

- Quantum Algorithm Implementations for Beginners : this paper presents many of the basic algorithms used for quantum computation. It's a good start if you want to check out what quantum computers can do and test them on a real one!

- A Survey of Techniques for Dynamic Branch Prediction : with all the Spectre and Meltdown attacks, this paper is a good refresher on what dynamic branch prediction is, how it works and why we need these techniques.

- Cgo and Python : when you want to run Python in your Go, and you realise that the Python threading model is still a pain in the $"!$*(^!&.
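The classic starting point for dynamic branch prediction is the bimodal predictor: a table of 2-bit saturating counters indexed by the low bits of the branch address. A Python sketch of that textbook scheme; the table size and the "weakly not-taken" initial state are arbitrary choices here.

```python
class BimodalPredictor:
    """Table of 2-bit saturating counters indexed by the low bits of the branch PC.

    Counter states 0 and 1 predict not-taken; 2 and 3 predict taken.
    """

    def __init__(self, index_bits: int = 10):
        self.mask = (1 << index_bits) - 1
        self.table = [1] * (1 << index_bits)  # start weakly not-taken

    def predict(self, pc: int) -> bool:
        return self.table[pc & self.mask] >= 2

    def update(self, pc: int, taken: bool) -> None:
        i = pc & self.mask
        if taken:
            self.table[i] = min(3, self.table[i] + 1)
        else:
            self.table[i] = max(0, self.table[i] - 1)


def accuracy(predictor, trace):
    """Fraction of correct predictions over a list of (pc, taken) outcomes."""
    correct = 0
    for pc, taken in trace:
        correct += predictor.predict(pc) == taken
        predictor.update(pc, taken)
    return correct / len(trace)
```

On a loop branch that is taken nine times and then falls through, the warmed-up counter mispredicts only the loop exit, because one not-taken outcome moves it from "strongly taken" to "weakly taken" rather than flipping the prediction. That hysteresis is the reason for using two bits instead of one.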

Labels: algorithm, branch prediction, golang, links of the day, papers, python, quantum

Tuesday, June 05, 2018

[Links of the Day] 05/06/2018: All about kubernetes - kops and descheduler

Today is all about k8s

- Kops : Production Grade K8s Installation, Upgrades, and Management

- Kops terraform : HA, Private DNS, Private Topology Kops Cluster all via terraform on AWS VPC

- Descheduler : this aims at solving the issue of overprovisioned nodes in k8s. The descheduler checks for pods and evicts them based on defined policies. Ideally, these policies aim at maximising resource usage without compromising availability.
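To make "evicts pods based on defined policies" concrete, here is a simplified Python sketch of a utilisation-balancing policy: if some nodes sit below a low threshold, evict pods from nodes above a high threshold so the regular scheduler can place them again. The thresholds, data structures and smallest-first eviction order are my own assumptions, not the descheduler's actual code, and a real implementation must go through the Kubernetes eviction API so that PodDisruptionBudgets are respected.

```python
from dataclasses import dataclass, field


@dataclass
class Pod:
    name: str
    cpu: float  # requested CPU cores


@dataclass
class Node:
    name: str
    capacity: float  # allocatable CPU cores
    pods: list = field(default_factory=list)

    def utilisation(self) -> float:
        return sum(p.cpu for p in self.pods) / self.capacity


def plan_evictions(nodes, low: float, high: float):
    """Return (node, pod) pairs to evict from over-utilised nodes.

    This only decides *what* to evict; rescheduling is left to the scheduler.
    """
    underused = [n for n in nodes if n.utilisation() < low]
    if not underused:
        return []  # nowhere for evicted pods to go, so evicting would not help
    evictions = []
    for node in nodes:
        if node.utilisation() <= high:
            continue
        used = sum(p.cpu for p in node.pods)
        # Evict the smallest pods first until the node drops to the high threshold.
        for pod in sorted(node.pods, key=lambda p: p.cpu):
            if used / node.capacity <= high:
                break
            evictions.append((node.name, pod.name))
            used -= pod.cpu
    return evictions
```

Checking for under-utilised nodes first is the availability safeguard in this sketch: eviction only happens when there is spare capacity to absorb the pods.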

Labels: Kubernetes, links of the day, schedulers

Microsoft aims at undercutting AWS's strategic advantage with its GitHub acquisition

Microsoft acquired the GitHub code sharing platform. This is a brilliant move. It allows Microsoft to offset some of the insane advantage that AWS has gained over the last couple of years via its innovate, leverage, commoditise (ILC) strategy.

[Figure: ILC model by Simon Wardley]

ILC relies on the following mechanism: the larger the ecosystem, the higher the economy of scale, the more users, the more products being built on top of it, and the more data gathered. AWS continuously uses this data trove to identify patterns and determine which features it is going to build and commoditise next. The end goal is to offer more industrialised components to make the entire AWS offer even more attractive. It's a virtuous circle, even if AWS sometimes cannibalises existing customers' products and market share along the way. Effectively, AWS customers are AWS's R&D department, feeding information back into the ecosystem.

As a result, AWS methodically eats away at the stack by standardising and industrialising components built on top of its existing offer. This further stabilises the ecosystem and enables it to tap further into the higher levels of the IT value chain. Consequently, AWS can reach more people while organically growing its offer at blazing speed with minimal risk. Because, apparently, all these startups are taking all the risks instead of AWS.

How does Microsoft's acquisition play into this? Well, Microsoft, with its Azure platform, is executing a similar play to the one AWS is delivering. However, Microsoft has a massive gap to bridge to catch up with AWS, and the difference is widening at incredible speed, as the economy of scale offers an exponential advantage. AWS has a significant head start in the ILC game, which gives it a massive data collection advantage over its competitors. However, Microsoft can hope to bridge that gap by directly undercutting AWS and instantly tapping into the information pipeline coming from GitHub. By doing so, Microsoft can combine the information coming from its Azure platform with GitHub's, giving it invaluable insight that combines actual component usage with developers' interests and usage. Moreover, this will also offer valuable insight into AWS and other cloud platforms, as a majority of the projects (open source or not) deploying onto them are hosted on GitHub.

[Figure: Cloud Wardley Map with GitHub position]

I quickly drew the Wardley map above to demonstrate how smart the acquisition of GitHub is. You can clearly see how the code sharing platform enables Microsoft to undercut AWS's strategic advantage by gaining ecosystem information straight from the developers and the platforms above. As Ballmer once yelled: Developers, developers, developers!

Thursday, May 31, 2018

[Links of the Day] 31/05/2018 : Testing Distributed Systems, Quantum Supremacy, TOGAF Tool

- Testing distributed systems : a curated list of resources on testing distributed systems. There is no silver bullet, just sweat, blood and broken systems.

- The Question of Quantum Supremacy : Google folks are trying to determine the smallest computational task that is prohibitively hard for today's classical computers but trivial for quantum computers. This is the equivalent of hello world for a quantum computer and is critical to validate quantum computer capability and correctness.

- Archi : an open source modelling tool to create ArchiMate models and sketches. If you have ever looked at TOGAF or used enterprise architecture principles, this tool is for you.

Labels: Distributed systems, enterprise architecture, links of the day, quantum, testing, togaf

Wednesday, May 30, 2018

The curse of low-tech Scrum

I recently read the following article that describes how Scrum disempowers devs. It criticises the "sell books and consulting" aspect that seems to have become the primary driver behind the Agile mantra. Sadly, I strongly agree with the authors' view.

Scrum brings some excellent value to the technical development process, such as:

- Sprints offer a better way to organise than waterfall.

- It forces you to ship functional products as frequently as possible to get feedback early and often from the end user.

- Requires stopping what you're doing on a regular basis to evaluate progress and problems.

However, Scrum quickly spread within the tech world as a way for companies to be "agile" without too much structural change. First, Scrum does not require technical practices and can be installed in place at existing waterfall companies, which end up doing what is effectively mini-waterfall. Second, such a deployment generates little disruption to the corporate hierarchy (and this is the crux of the issue). As a result, Scrum allows managers and executives to feel like something is being done without disturbing the power hierarchy.

Even though the method talks about being flexible and adapting when there are real business needs to adjust to, the higher levels of the corporation rarely adjust their own approach. This relegates Scrum to a tool that lets companies move marginally in the direction of agility and declare "mission accomplished": a low-tech placebo solution to an organisational aspiration.

Last but not least, adopting a methodology for its own sake is often doomed to fail. If you have a customer that needs a new thing built by a specific date, then Scrum is less than ideal, as it requires flexible dates and customer stakeholders who are deeply involved in the process. The waterfall approach would be a better choice, as it forces you to define the project up front and makes changes to the plan, and thus to the scope, explicit.

It is often disappointing to see consulting firms claim that organisations need to adopt agile. Without the required structural change, it's a piecemeal solution that will only temporarily mask deeper organisational problems. Just because your dev teams started to use agile or devops does not mean your organisation as a whole suddenly became more agile.

Don't misunderstand this blog post as a complete rejection of the principles of Scrum and agile. It's not. The core ideas are awesome and should be adopted where they fit. Other methodologies, such as waterfall, devops, etc., also have their place in an organisation depending on the lifecycle stage of the products. However, these need to be adopted alongside organisational change beyond the dev teams to improve the overall operations and efficiency of the company. Without it, it's just a low-tech placebo.

Tuesday, May 29, 2018

[Links of the Day] 29/05/2018 : Tracer performance, Testing Terraform, Virtual-kubelet

- Benchmarking kernel and userspace tracers : a good recap of the tracing toolkits out there and the performance tradeoffs that come with them.

- terratest : this is what I was looking for: a way to test and validate my Terraform scripts. I think this will really help the adoption of Terraform, as it will significantly increase confidence in Terraform code before deployment.

- Virtual-Kubelet : that's a really cool concept, and it introduces a great dose of flexibility into your Kubernetes cluster deployment. There are already some really exciting solutions leveraging it, such as the AWS Fargate integration. With this, you could easily implement bursting and batching solutions, or truly hybrid k8s solutions with virtual kubelets hosted in Azure, AWS and your private cloud.

Labels: infrastructure, Kubernetes, links of the day, terraform, testing, tracing

Thursday, May 24, 2018

[Links of the day] 24/05/2018 : TLA+ video course, Pdf Generator, Quantum Algorithms Overview

- The TLA+ Video Course : if you have ever had to design a distributed system and spent sleepless nights thinking about edge cases, TLA+ is a godsend. You just spec your system and go. It also greatly reduces cognitive load when you're implementing your system against a TLA+ spec. The hard stuff is already done. You can just glance at the spec to see what preconditions must be checked before an action is performed. No more pausing halfway through writing a function because you suddenly think of an obscure sequence of events that breaks your code.

- ReLaXed : generates PDFs from HTML. It supports Markdown, LaTeX-style mathematical equations, CSV conversion to HTML tables, plot generation, and diagram generation. Many more features can be added simply by importing an existing JavaScript or CSS framework.

- Quantum algorithms: an overview : a survey of some known quantum algorithms, with an emphasis on a broad overview of their applications.
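To make the "obscure sequence of events" point concrete: the TLC model checker that comes with TLA+ essentially enumerates every reachable state of a spec and checks invariants in each one. The Python sketch below does the same brute-force exploration for a hypothetical toy system, two processes each performing a non-atomic `x = x + 1` (read, then write), and finds the interleaving that loses an update. It is plain Python, not TLA+ syntax, and it is far less capable than TLC.

```python
from collections import deque

# State: (x, pc0, tmp0, pc1, tmp1); each pc is "read", "write" or "done".
INIT = (0, "read", 0, "read", 0)


def next_states(state):
    """All states reachable in one step, with one process moving at a time."""
    x, *procs = state
    result = []
    for i in range(2):
        pc, tmp = procs[2 * i], procs[2 * i + 1]
        new = list(procs)
        if pc == "read":
            new[2 * i], new[2 * i + 1] = "write", x  # tmp := x
            result.append((x, *new))
        elif pc == "write":
            new[2 * i] = "done"  # x := tmp + 1
            result.append((tmp + 1, *new))
    return result


def check(invariant):
    """Breadth-first search; return a shortest trace to a violating state, or None."""
    parent = {INIT: None}
    queue = deque([INIT])
    while queue:
        state = queue.popleft()
        if not invariant(state):
            trace = []
            while state is not None:
                trace.append(state)
                state = parent[state]
            return trace[::-1]
        for nxt in next_states(state):
            if nxt not in parent:
                parent[nxt] = state
                queue.append(nxt)
    return None


def both_done_means_two(state):
    """Invariant: once both processes have finished, x must equal 2."""
    x, pc0, _, pc1, _ = state
    return not (pc0 == "done" and pc1 == "done") or x == 2
```

`check(both_done_means_two)` returns the counterexample in which both processes read 0 before either one writes, leaving x at 1: exactly the kind of interleaving that is easy to miss when reasoning by hand.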

Labels: algorithm, Distributed systems, links of the day, pdf, quantum, tla, verification

Monday, May 21, 2018

[Links of the Day] 21/05/2018 : Automation and Make, FoundationDB, Usenix NSDI18

- Automation and Make : this is a really good description of best practices for Makefiles and automation.

- FoundationDB : Apple open sourced its distributed DB system, and another contender enters the fray. With Spanner on Google Cloud, CockroachDB and now FoundationDB, highly resilient distributed transactional systems are starting to reach widespread usage. [Github]

- Usenix NSDI 2018 Notes : a very good overview of the NSDI conference; naturally, The Morning Paper is currently doing a more in-depth analysis of the main papers. [day 2&3]

Labels: apple, conference, database, links of the day, usenix

Friday, May 18, 2018

Hedging GDPR with Edge Computing

Cloud has drastically changed the way companies deal with data as well as compute resources. Provisioning is no longer constrained by long and tedious procurement processes, and the cloud offers unparalleled flexibility. The next wave of change is currently taking shape: a combination of serverless solutions, offering ever more flexibility coupled with tighter financial control, and the edge, where the amount of data and the complexity of applications are driving requirements for local options.

IoT and AR/VR are the two obvious applications driving enterprises to the edge, because they rely on complicated and expensive solutions coupled with humongous performance requirements, such as extra-low latency with no jitter.

However, other reasons for edge computing are starting to emerge, and they will probably attract more traditional enterprises because of the advantages conferred by the ultra-localisation of data and compute.

GDPR has the potential to accelerate edge computing adoption. Edge computing can offer hyper-localisation of data storage as well as processing. These features tick many boxes of the regulatory requirements. With the boom in personal data being generated by the ever-increasing number of consumer devices, like smart watches, smart cars and smart homes, there is an ever-looming risk for companies to expose themselves to GDPR infractions. Not to mention that data ownership and responsibility can be tricky questions to answer: for example, who is responsible for the data, the consumer, the watch provider or the vendor?

One of the solutions delivered by edge computing would be to store and process data onsite within the local premises or a delimited geographical perimeter. This would not only offer greater access and guaranteed control, but also enable hyper-localisation and regulatory compliance.

Hence, there is a significant potential market for future edge computing providers to offer robust regulatory and compliance solutions. Look at gaming servers and underage data protection, or HR and healthcare information. There is a vast pool of customers that will now see a way of leveraging cloud-like models while maintaining tight geographical and regulatory constraints. One option would be to offer a form of reverse takeover, or merger: edge computing providers would be invited to leverage existing on-premise infrastructure and turn it into cloud-like serverless solutions with strong compliance out of the box. This would allow companies to benefit at low cost from cloud-like flexibility while offering robust regulatory compliance by explicitly exposing and constraining storage and compute operations to specific locations.

Last but not least, edge computing providers will be able to give third parties on-demand access to local data or processing capability while enforcing robust compliance, opening an entirely new market for compliant brokerage. For example, customers could allow the vendor to share data, or extracted metadata, with the watch provider or with their own medical insurance company. All these interactions would be mediated (and charged for) by the edge computing provider.

By becoming the custodian of data at the edge, edge computing providers can build a two-sided market. On one side, they serve data generators (customers, individuals, organisations), aka data issuers and issuer processors. On the other side, they serve merchants, advertising companies, insurers, etc., aka acquirers and acquirer processors. Edge computing providers would facilitate the transactions between issuers and acquirers while enabling hyper-local and compliant solutions. A little bit like Visa, but for data and compute.

Labels: cloud, compliance, gdpr, regulation, serverless
